5 things I wish someone had told me before I tried self-hosting a local LLM

Summary

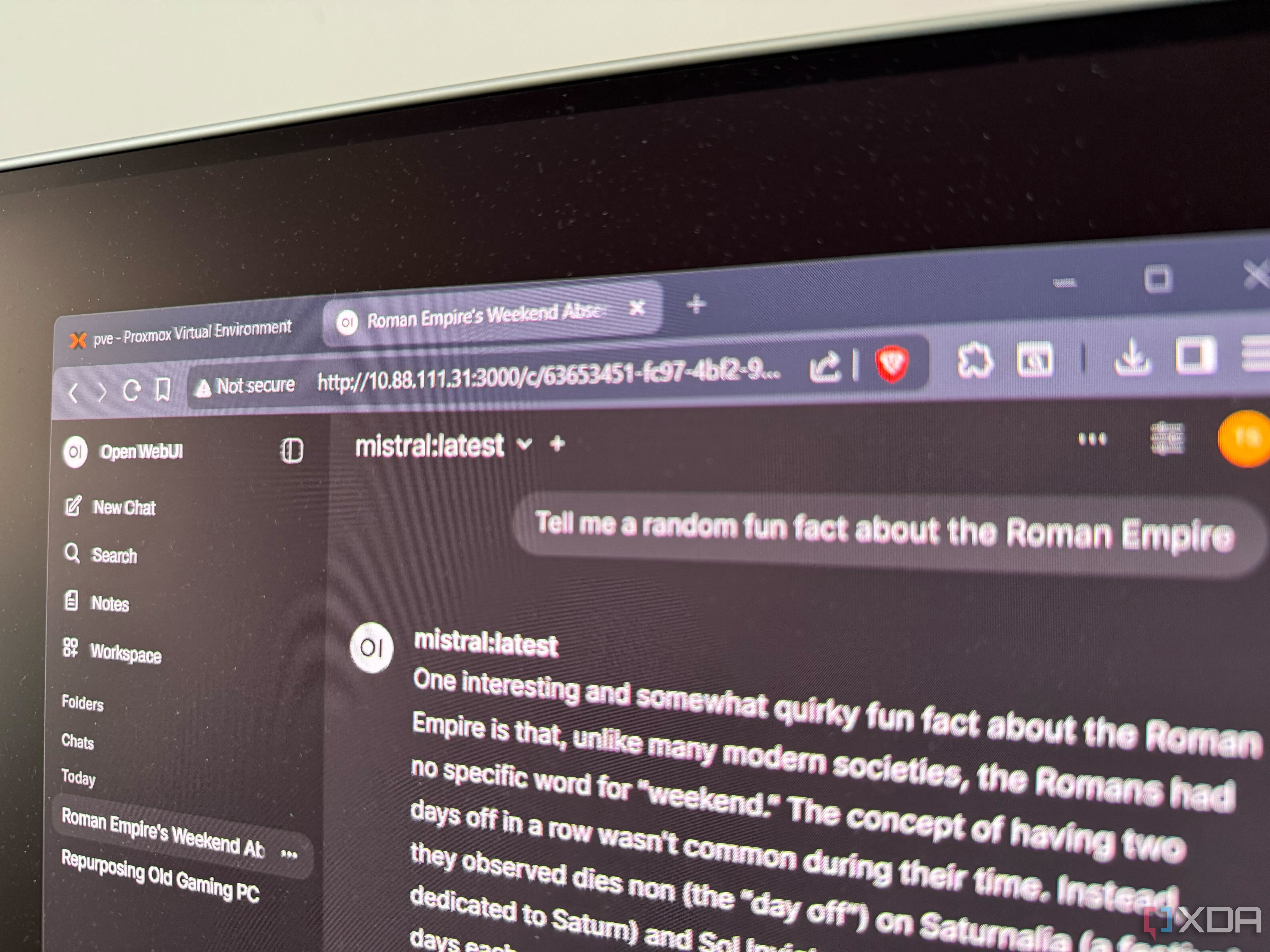

Self-hosted AI has gone from a novelty to a practical tool, largely thanks to quantized models and plugins. An old PC can now serve as a private AI assistant, which improves privacy and extends what the machine can do without relying on external servers.

Key Insights

What hardware is needed to self-host a local LLM on an old PC?

Self-hosting an LLM on an old PC is feasible with quantized models, which reduce memory and compute requirements enough for consumer GPUs, or even CPUs, to run them as private AI assistants. Performance still depends on model size and on hardware specs such as GPU VRAM.
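
To make the memory savings from quantization concrete, here is a rough back-of-the-envelope sketch. It estimates only the memory for the model weights as parameters times bits per weight. The 7-billion-parameter model, the 8 GiB memory budget, and the 20% overhead allowance for context cache and runtime buffers are illustrative assumptions, not figures from the article, and the function names are hypothetical.

```python
def weight_memory_gib(n_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    total_bytes = n_params * bits_per_weight / 8
    return total_bytes / (1024 ** 3)


def fits_in_memory(
    n_params: float,
    bits_per_weight: float,
    available_gib: float,
    overhead_fraction: float = 0.2,  # assumed allowance, not a measured value
) -> bool:
    """Check whether weights plus an assumed overhead fit in the given memory."""
    needed = weight_memory_gib(n_params, bits_per_weight) * (1 + overhead_fraction)
    return needed <= available_gib


if __name__ == "__main__":
    n_params = 7e9        # assumed model size: 7 billion parameters
    available_gib = 8.0   # assumed VRAM or RAM budget

    for bits in (16, 8, 4):
        size = weight_memory_gib(n_params, bits)
        ok = fits_in_memory(n_params, bits, available_gib)
        print(f"{bits:>2}-bit: ~{size:.1f} GiB of weights, fits in {available_gib} GiB: {ok}")
```

Under these assumptions, the same model that needs roughly 13 GiB at 16 bits per weight needs a little over 3 GiB at 4 bits, which is why quantization can bring a model within reach of older hardware. Real usage also grows with context length, so treat the result as a lower bound.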

What are the main challenges of self-hosting a local LLM?

The main challenges are setup and maintenance complexity that demands technical expertise, significant upfront hardware costs plus ongoing electricity costs, and security risks from vulnerabilities if the deployment is not properly secured. These trade-offs come alongside the benefits of privacy and offline use.